Your CX team just presented to the board. The quarterly NPS dropped 8 points. The CEO wants to know why.

You pull up the survey data: 347 responses out of 6,800 customers. The open-ended comments mention "billing confusion" 23 times and "poor support experience" 31 times. That's all you have.

What you don't know: Your contact center handled 12,000 customer conversations that same quarter. Billing issues appeared in 2,400 of them, not the vague "billing confusion" from surveys, but specific friction points like "can't update payment method on mobile" and "charged twice for annual renewal." Support quality complaints weren't about agent rudeness; they were about agents lacking the authority to issue refunds, forcing customers to go through three escalations for simple requests.

The intelligence needed to fix your NPS problem exists. You're just operating with 95% blind spots.

This isn't just a survey problem - it's an organizational problem

Let's be specific about what's happening across your business right now:

In your support center, agents handle 2,000 calls per week. Your QA team manually reviews 20 of them, exactly 1%. Last month, a billing error affected 180 customers before anyone noticed. The pattern was visible in call transcripts from day three, but your 1% sampling missed it entirely. By the time escalations reached leadership, you'd already lost 12 customers and damaged relationships with 60 more.

In your product team, the roadmap debate centers on whether to invest in mobile app improvements or checkout flow optimization. The product has survey data showing 200 customers requested "better mobile experience." What they're missing: 8,000 chat conversations contain detailed mobile app friction, specific features that don't work, exact points where users abandon tasks, and clear comparisons to competitor apps. The decision gets made on instinct, and whoever speaks loudest, not based on comprehensive customer intelligence.

In your executive meetings, the CFO questions your $500K CX investment. You present before-and-after survey scores showing a 4-point improvement. They want to know what specifically drove the change and whether it's sustainable. You can't answer with confidence because you're measuring impact through 5% of customer reality. The conversation ends with "let's revisit this next quarter", code for "prove this actually matters."

This plays out thousands of times across organizations. Strategic decisions are made on incomplete data. Problems were discovered weeks after they started. Investments that can't be proven. All while the complete intelligence sits unanalyzed in customer conversations.

The hard truth about survey-based intelligence

Many of our customers report that email surveys now achieve response rates below 5%, with some as low as 1%. But the bigger issue is bias.

Survey respondents systematically differ from non-respondents. Dissatisfied customers are less likely to complete surveys, meaning your data captures only a fraction of customer reality and skews toward the satisfied minority. You're not getting representative intelligence; you're getting the people motivated enough to respond.

Meanwhile, conversation volume is exploding in the opposite direction. Support conversations contain 8-12 discrete pieces of business intelligence: reason for contact, satisfaction signals, product feedback, process friction, resolution outcome, emotional journey, agent effectiveness, and cross-sell opportunities.

The gap between what customers are telling you and what you're actually hearing has never been wider.

Why conversation data has been unusable (until now)

The problem isn't that organizations don't have conversation data. Most have years of it stored across contact centers, chat platforms, email systems, and CRM tools. The problem is that conversation data exists in forms that are inherently inaccessible for analysis.

Reason 1: The email problem

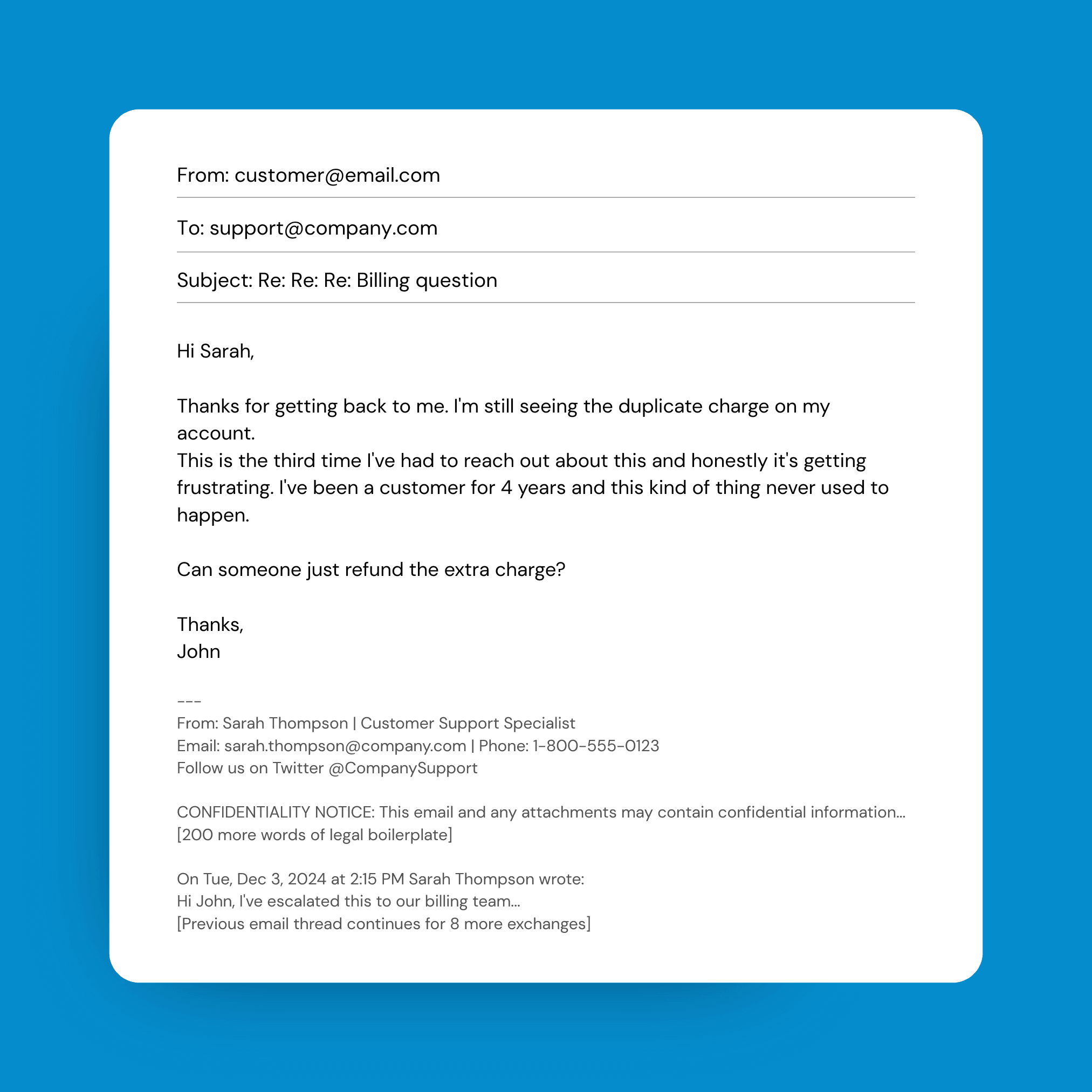

Take a typical email thread between a customer and support. Here's what the raw data looks like:

The actual customer message is 4 sentences buried in threading artifacts, signatures, legal disclaimers, and process history. Multiply this by 10,000 email threads per month and you have an impossible manual analysis task.

Reason 2: The chat transcript problem

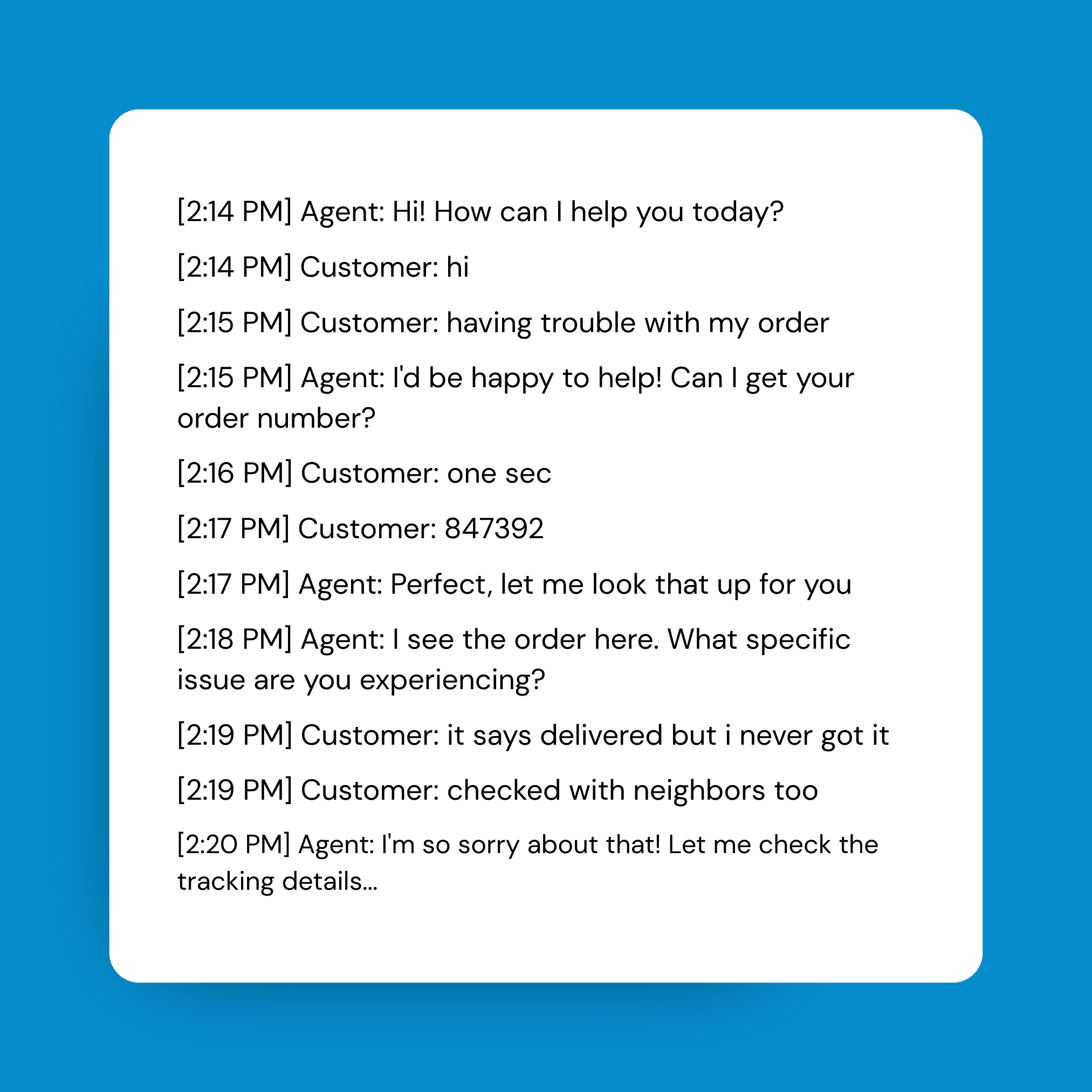

Chat conversations present the opposite challenge, they're too long and meandering:

The actual issue, missing delivery, doesn't surface until the 10th message in a conversation that ultimately runs 40 exchanges. The critical business intelligence (delivery failure, carrier issue, customer frustration level, resolution outcome) is scattered throughout a 15-minute transcript filled with pleasantries and process steps.

Reason 3: The audio recording problem

Support calls are even more challenging. An 18-minute call might contain:

2 minutes of hold music and IVR navigation

3 minutes of verification and account lookup

1 minute of small talk while the agent pulls up systems

4 minutes of the customer explaining the issue (with tangents and background)

5 minutes of troubleshooting and back-and-forth

2 minutes of resolution and closing

1 minute of post-call survey prompt

The actual reason for contact, satisfaction level, resolution status, and product feedback exist somewhere in those 18 minutes. But extracting it requires either:

Manual listening and note-taking (impossible at scale)

Expensive transcription with human review (too slow and costly)

AI transcription without structure (gives you searchable text but not analyzable intelligence)